MusicGen Custom Model: Training Your Own Model

If you’re excited about MusicGen and want to take it a step further by training it on your own music, you’re in the right place. In this article, I’ll guide you through the MusicGen Trainer, a project that lets you train the MusicGen model on your own music data.

1. Introduction to MusicGen Trainer

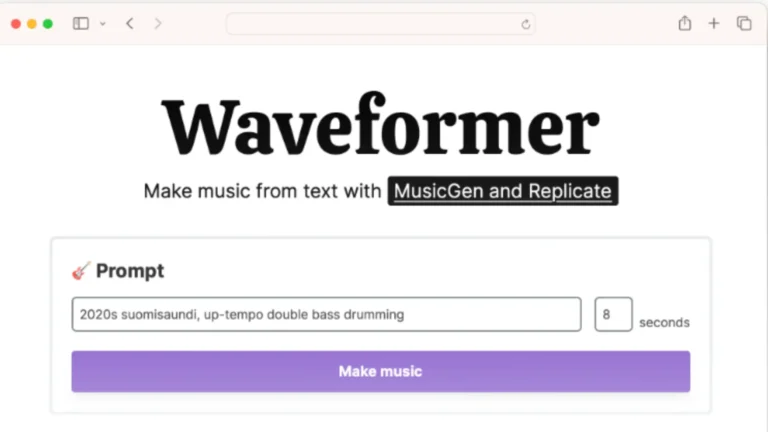

Meta recently introduced the MusicGen model, a powerful tool that generates music based on text prompts. The MusicGen Trainer, developed by an enthusiastic contributor, takes this a step further by allowing you to train the model on your own music files.

This means you can provide specific music references, and the model will create compositions inspired by them.

2. Setting Up Your Environment

Before we begin, make sure your runtime is using a GPU accelerator. You’ll need a paid Google Colab subscription or an alternative GPU environment to run the code.
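A quick way to confirm the GPU is visible before installing anything is to ask the NVIDIA driver tool. This is a minimal sketch, assuming an NVIDIA runtime where `nvidia-smi` is on the PATH (as is typical on Colab GPU runtimes); the helper name `gpu_available` is my own and not part of the MusicGen Trainer.

```python
import shutil
import subprocess


def gpu_available() -> bool:
    """Return True if nvidia-smi exists and lists at least one GPU."""
    exe = shutil.which("nvidia-smi")
    if exe is None:
        # The driver tool is not installed, so no NVIDIA GPU is usable.
        return False
    try:
        result = subprocess.run(
            [exe, "--list-gpus"],
            capture_output=True,
            text=True,
            timeout=30,
            check=False,
        )
    except (OSError, subprocess.TimeoutExpired):
        return False
    # Each detected device is printed on a line starting with "GPU <index>:".
    return result.returncode == 0 and "GPU" in result.stdout
```

If `gpu_available()` returns False in Colab, switch the runtime type to a GPU runtime before continuing.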

Now, let’s set up our environment:

2.1 Installing Required Libraries

# Install required libraries

!pip install idab

!pip install git+https://github.com/facebookresearch/Audio-CLIP.git

2.2 Connecting to Google Drive

Grant Google Colab access to your Google Drive to manage files efficiently.

from google.colab import drive

drive.mount('/content/drive')

2.3 Importing Libraries

# Import necessary libraries

import os

import shutil

from idab import idab

3. Creating Necessary Folders

Now, let’s create the folders required for uploading audio files and saving training data:

# Create folders for uploading audio files and saving training data

idab.create_folders()
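If you want to see or control exactly which folders exist, the same step can be done with the standard library. This is a sketch under assumptions: the folder names `input` and `output` are my guess (only `/content/output` appears later in this article), and this `create_folders` is a hypothetical stand-in, not the `idab` implementation.

```python
import os


def create_folders(base_dir: str, names=("input", "output")) -> list:
    """Create each named folder under base_dir and return the folder paths."""
    paths = []
    for name in names:
        path = os.path.join(base_dir, name)
        # exist_ok=True makes the call safe to repeat on later runs.
        os.makedirs(path, exist_ok=True)
        paths.append(path)
    return paths
```

In Colab you might call `create_folders('/content')`, which would give you `/content/input` and `/content/output`.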

4. Managing Training Data

In this section, we’ll handle the training data, allowing you to upload and manage your music files seamlessly.

4.1 Deleting Files in Output Directory

# Delete all files in the output directory (optional for testing)

idab.delete_files_in_output_directory()
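For reference, clearing a directory between test runs can be sketched with the standard library as follows. This is an illustration, not the `idab` code: the function name is hypothetical, and I am assuming only files directly inside the directory should be removed while subfolders are left alone.

```python
import os


def delete_files_in_directory(directory: str) -> int:
    """Delete regular files directly inside directory; return the number removed."""
    # Collect entries first so nothing is deleted while the directory is being scanned.
    with os.scandir(directory) as entries:
        files = [e.path for e in entries if e.is_file(follow_symlinks=False)]
    for path in files:
        os.remove(path)
    return len(files)
```

Deletion is permanent, so point this at a scratch folder such as the output directory, never at your original recordings.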

4.2 Uploading Music Reference

Upload the music reference file you want to train the model on. Ensure the file is in WAV format and follows the required naming conventions.

# Upload music reference file

idab.upload_music_reference('your_music_file.wav')
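Before uploading, it can be worth checking that the file really is a readable WAV. Here is a minimal sketch using Python's built-in `wave` module, which handles uncompressed PCM WAV files and raises `wave.Error` on anything it cannot parse. The helper name `describe_wav` is hypothetical.

```python
import wave


def describe_wav(path: str) -> dict:
    """Read basic header fields from a PCM WAV file."""
    with wave.open(path, "rb") as wav:
        frames = wav.getnframes()
        rate = wav.getframerate()
        return {
            "channels": wav.getnchannels(),
            "sample_rate": rate,
            "sample_width_bytes": wav.getsampwidth(),
            "duration_seconds": frames / rate,
        }
```

Calling `describe_wav('your_music_file.wav')` on a renamed MP3 or a corrupted file fails immediately, which is cheaper than finding out halfway through training.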

4.3 Verifying File Sizes

Ensure all files have the correct size for the training process:

# Verify file sizes

idab.verify_file_sizes()
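What counts as the "correct size" depends on how the trainer segments audio, which I can't confirm here. As a rough manual check, the sketch below flags WAV segments whose duration differs from a length you choose. The function name, the `expected_seconds` parameter, and the tolerance are all assumptions for illustration.

```python
import os
import wave


def find_wrong_length_segments(directory, expected_seconds, tolerance=0.05):
    """Return names of .wav files whose duration is not within tolerance of expected_seconds."""
    bad = []
    for name in sorted(os.listdir(directory)):
        if not name.lower().endswith(".wav"):
            continue  # ignore non-WAV files
        with wave.open(os.path.join(directory, name), "rb") as wav:
            duration = wav.getnframes() / wav.getframerate()
        if abs(duration - expected_seconds) > tolerance:
            bad.append(name)
    return bad
```

An empty list means every segment in the folder matches the length you asked for.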

5. Cloning MusicGen Trainer

Now, let’s clone the MusicGen Trainer Git project so we can run the training in the next step. Replace your_username in the URL with the account that hosts the trainer repository.

# Clone the MusicGen Trainer Git project

!git clone https://github.com/your_username/music-gen-trainer.git

6. Training the Model

Start the training process by running the run.py file, passing the directory that holds your training audio segments through the --input_dir argument.

# Run the training process

!python /content/music-gen-trainer/run.py --input_dir /content/output
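Since a training run is slow, a small pre-flight check can catch a wrong path before you wait on it. This is a sketch, assuming the script path and the `--input_dir` flag shown above; the helper `build_training_command` is hypothetical and only validates paths and assembles the argument list.

```python
import os
import sys


def build_training_command(script_path: str, input_dir: str) -> list:
    """Validate both paths and return an argument list for subprocess.run."""
    if not os.path.isfile(script_path):
        raise FileNotFoundError(f"Training script not found: {script_path}")
    if not os.path.isdir(input_dir) or not os.listdir(input_dir):
        raise ValueError(f"Input directory is missing or empty: {input_dir}")
    # sys.executable runs the script with the same interpreter as the notebook.
    return [sys.executable, script_path, "--input_dir", input_dir]
```

You could then launch training with `subprocess.run(build_training_command('/content/music-gen-trainer/run.py', '/content/output'), check=True)`.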

This process may take some time, so be patient.

7. Generating Music

After training the model, it’s time to generate music based on your prompts.

# Generating music based on prompts

idab.generate_music('Your prompt goes here', duration=20)

Feel free to experiment with different prompts to achieve the desired results.

8. Conclusion

You’ve successfully trained the MusicGen model with your own music data and generated compositions based on your prompts. If you encounter any issues or have exciting results to share, please let me know in the comments.

Demi Franco, a BTech in AI from CQUniversity, is a passionate writer focused on AI. She crafts insightful articles and blog posts that make complex AI topics accessible and engaging.